What follows is a whirlwind tour of an area of programming usually only whispered of and seldom explored, perhaps for good reason. Lock-free techniques allow multiple threads to work together in a non-blocking way, often achieving incredible performance. As the name suggests, locks are not used. If the idea of a multithreaded program without mutexes makes you uncomfortable, you are quite sane.

Yet lock-free systems are pervasive. Some memory allocators, such as jemalloc, rely on a lock-free radix tree at their core. If you have used a queue or channel to communicate between threads, there’s an excellent chance the underlying structure was lock-free. If you have used a language with atomic reference counters, there are lock-free techniques employed throughout the lifetime of objects that use them. Even high-performance databases that avoid the overhead of concurrent structures in their data path still usually rely on a lock-free queue of some sort to communicate across threads.

Most respectable engineers know to avoid lock-free techniques at all costs, just like threading, distributed systems, or any of the myriad human productivity sinks that are better sidestepped with a refactor or more powerful hardware. If you can use one thread, you should.

Single-threaded systems are infinitely easier to reason about, will have fewer bugs, and usually have better per-thread performance anyway due to not needing to block on a mutex or disrupt CPU progress with memory barriers. When you must use concurrency, you can sometimes get comparable or better performance than lock-free techniques by using fine-grained mutexes.

As someone who finds bugs in systems for fun and profit (occasionally successfully!) let me be clear:

Artisanal lock-free algorithms are symptomatic of a long chain of bad decisions. They are almost impossible to correctly write. As far as I can tell, there are only two people who actually know how to write them. These algorithms are extremely difficult to reason about, and you will be forever haunted by the real possibility that you missed some critical assumption.

Lock-free algorithms often require knowledge of obscure tooling to verify, and usually violate the single-writer principle. If you’re lucky enough to have found a bug you created, it could be quite challenging to reproduce it. Sometimes in mailing lists you see stuff like “I ran it for 38 hours on a test cluster and it didn’t blow up, but I just thought of this theoretical edge case I haven’t been able to reproduce yet and I think may be possible.” (I’ll cover the issues of exhaustive and deterministic testing in a future post featuring using ptrace and some tricks I found in a shady exploit crafter’s toolbox to tease out bugs!)

So, without further ado, let’s ignore our better judgement and light ourselves on fire!

Lock-Free 101

In lock-free programming, we don’t use mutexes. Too safe, they say. Too luxurious an abstraction for the bleeding edge. Some folks go so far as to intentionally trigger race conditions or dangling pointers just to get high on performance. The depravity in this world runs deep…

Roadmap

Atomic operations will be our gateway drug. We will advance through spinlocks, stacks and transactions. A typical tale of overindulgence before crashing hard, being dealt a harsh lesson in the ABA problem and finally ending up in an 8-step responsible implementation program.

Please note that some of my examples come from the world of distributed systems, which I am more familiar with, although the (utterly depraved) mindset is generally similar.

Atomic Operations

Atomicity, for the purposes of this article, can be interpreted to mean indivisible, uninterruptible, and either 100% successful or 100% unsuccessful. It is impossible to witness a half-complete atomic operation. Examples of things that are atomic are database transactions (but not always as you expect), the mv command found on most unix-like systems, breaking a glass window (it’s either broken or not, never something in-between), etc…

Several of the most popular CPU architectures have instructions that let you atomically set memory to a new value conditionally if you know the current value. This is called “compare and swap” or “compare and set” (CAS). Its simpler cousin, “test and set” (TAS), unconditionally sets a flag and reports what the flag held before. The hardware makes sure that only one thread “wins” if several threads attempt a CAS at the same time. All others are unsuccessful. The return value varies across implementations, but a good implementation clearly indicates success or failure.

CAS(variable, old, new) is the shorthand I will use. If variable is set to 0 then CAS(variable, 0, 1) would succeed, as long as another thread didn’t change the value while we weren’t looking. Then CAS(variable, 1, 0) would set it back. But CAS(variable, "hot garbage", 0) would not work unless one of our thread friends has given variable a surprise hot-garbage makeover ;) If several threads try to do CAS(variable, 0, 1) at the same time when the value was set to 0, only one of them will succeed.
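To make the shorthand concrete, here is a minimal sketch in Rust. The names `cas` and `count_winners` are made up for this illustration; the only real API involved is `AtomicUsize::compare_exchange` from the standard library, and the sequentially consistent ordering is simply the least surprising choice for a demo, not a recommendation.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::thread;

/// CAS(variable, old, new) from the text (hypothetical helper name).
/// Returns true if `variable` held `old` and was atomically replaced by `new`.
fn cas(variable: &AtomicUsize, old: usize, new: usize) -> bool {
    variable
        .compare_exchange(old, new, Ordering::SeqCst, Ordering::SeqCst)
        .is_ok()
}

/// Let `threads` threads race to do CAS(variable, 0, 1) and count the winners.
fn count_winners(threads: usize) -> usize {
    let variable = AtomicUsize::new(0);
    let winners = AtomicUsize::new(0);
    thread::scope(|s| {
        for _ in 0..threads {
            s.spawn(|| {
                if cas(&variable, 0, 1) {
                    winners.fetch_add(1, Ordering::SeqCst);
                }
            });
        }
    });
    winners.load(Ordering::SeqCst)
}
```

However many threads you throw at `count_winners`, exactly one of them sees its CAS succeed; every other thread finds that the value is no longer 0.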

The amount of data that CAS can operate on is usually limited to the “word size” of the system it’s running on, which is usually the same as the length of a memory address. (edit: I originally wrote that for x86_64 you’re stuck with at most 64 bits of comparative power, but x86_64 actually has a 128-bit CAS, although it’s not available from languages like Go, Java, or Rust. Thanks robgssp!) 32-bit architectures often have a double-word “DCAS” that can work on 64 bits. But one word is usually sufficient for use with pointers, counters, bit-packed headers, and all kinds of interesting stuff.

One sees some really creative uses of space in the lock-free world; it reminds me of the clever tricks people do in the Demoscene to squeeze every last drop of functionality out of a single bit. But this often imposes limits on portability. I’ll touch on some creative ways to use the few bits CAS can compare in the Mitigating ABA section below.

And yet, CAS is an extremely powerful primitive! More than enough rope to hang ourselves with! Let’s build a teetering tower of abstractions that could collapse at any second with the slightest misplaced assumption!

A Simple Spinlock

We can gaze into our past lives of locked leisure by implementing a spinlock in just a few lines. This is not a lock-free algorithm because threads will block until the lock is acquired, but it will illustrate the idea of threads coordinating by using atomic operations. We will spin in a loop until we can successfully change a lock variable from unlocked to locked. Only one thread will be granted exclusive access at a time! Assuming we didn’t accidentally create a spy…
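A minimal sketch of such a spinlock in Rust follows. The type `SpinLock` and its `with_lock` method are names invented for this example, and the closure-based API is just one convenient way to guarantee the unlock happens. `std::hint::spin_loop` is the standard library’s hint that we are busy-waiting (on x86 it emits a PAUSE instruction).

```rust
use std::cell::UnsafeCell;
use std::hint;
use std::sync::atomic::{AtomicBool, Ordering};

/// A toy spinlock (hypothetical type for illustration, not production code).
pub struct SpinLock<T> {
    locked: AtomicBool,
    value: UnsafeCell<T>,
}

// Sharing is sound because `locked` serializes every access to `value`.
unsafe impl<T: Send> Sync for SpinLock<T> {}

impl<T> SpinLock<T> {
    pub fn new(value: T) -> Self {
        SpinLock { locked: AtomicBool::new(false), value: UnsafeCell::new(value) }
    }

    /// Spin until the lock is ours, run `f` with exclusive access, then unlock.
    /// Caveat of this sketch: if `f` panics, the lock stays held forever.
    pub fn with_lock<R>(&self, f: impl FnOnce(&mut T) -> R) -> R {
        // CAS(locked, false, true): only one thread can win at a time.
        while self
            .locked
            .compare_exchange_weak(false, true, Ordering::Acquire, Ordering::Relaxed)
            .is_err()
        {
            hint::spin_loop(); // tell the CPU we are busy-waiting
        }
        let result = f(unsafe { &mut *self.value.get() });
        self.locked.store(false, Ordering::Release); // unlock
        result
    }
}
```

A usage example would be `lock.with_lock(|n| *n += 1)` called from several threads at once: each increment happens under exclusive access, so none are lost.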

Even though a spinlock is burning power in a tight loop until it succeeds, it actually is sometimes preferred over a traditional mutex. Traditional mutexes put a thread to sleep when they block, increasing the minimum latency for acquiring them from another thread. Modern mutexes are often a hybrid approach between a spinlock and a traditional mutex. Hybrid mutexes will attempt to acquire the lock quickly in userspace using atomic operations, without giving away their kernel-scheduled appointment on the CPU core. If the gambit didn’t pan out, the hybrid mutex will put the thread to sleep with a blocking syscall.

Many real-world implementations of spinlocks for x86 will issue a PAUSE instruction while spinning, which improves their efficiency dramatically. In addition to spinlocks avoiding the kernel scheduling overhead of going to sleep and waking up again, we also avoid CPU frequency scaling slowdowns that can happen while blocked on a mutex, so when we enter our critical section we don’t need to pay a start-up tax.

There are still some cases when you would prefer a straight-up spinlock, like if you know the expected time to acquire the lock is less expensive to you than the overhead of a modern hybrid mutex, or if you want a piece of code to be extremely responsive at the cost of some other system resource like power. But as the expected time to acquire the lock goes up, the balance shifts in favor of the mutex (mutexes in most popular threaded languages use the hybrid approach today).

A Treiber Stack

A Treiber stack will be our first true lock-free structure! It looks a lot like a linked list, with a head that points to the tip and nodes that point to the next node. We can push things into a lock-free stack like this:

- Create a node (effectively the same as a linked-list node)

- Read the current stack.head and set our node.next to it.

- CAS the stack’s head from the head we just read to our new node. If it worked, we’re done! If not, GOTO 2.

Popping the stack is similar:

- Read the current stack.head. If it’s not set, either retry or return nothing depending on if you have blocking or non-blocking semantics. The example below is non-blocking, and returns nothing.

- If head is set, we try to pop it. Try to CAS the stack’s head to its head.next value. If it worked, return the now-severed head! Otherwise GOTO 1.
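The push and pop steps above can be sketched in Rust roughly as follows. This is a deliberately naive illustration, and the names `TreiberStack` and `Node` are my own. The big assumption, stated loudly: popped nodes are never freed. Leaking them sidesteps the dangling-pointer and ABA hazards covered later in this article, at the cost of a memory leak that no real implementation could accept.

```rust
use std::ptr;
use std::sync::atomic::{AtomicPtr, Ordering};

struct Node<T> {
    value: T,
    next: *mut Node<T>,
}

/// A toy Treiber stack (hypothetical type). ASSUMPTION: nodes are leaked
/// after pop, so their addresses are never reused or dereferenced after free.
pub struct TreiberStack<T> {
    head: AtomicPtr<Node<T>>,
}

unsafe impl<T: Send> Send for TreiberStack<T> {}
unsafe impl<T: Send> Sync for TreiberStack<T> {}

impl<T> TreiberStack<T> {
    pub fn new() -> Self {
        TreiberStack { head: AtomicPtr::new(ptr::null_mut()) }
    }

    pub fn push(&self, value: T) {
        // Step 1: create a node.
        let node = Box::into_raw(Box::new(Node { value, next: ptr::null_mut() }));
        loop {
            // Step 2: read the current head and point our node at it.
            let head = self.head.load(Ordering::Acquire);
            unsafe { (*node).next = head };
            // Step 3: CAS the head from what we read to our node, else retry.
            if self
                .head
                .compare_exchange_weak(head, node, Ordering::Release, Ordering::Relaxed)
                .is_ok()
            {
                return;
            }
        }
    }

    /// Non-blocking pop: returns None when the stack is empty.
    pub fn pop(&self) -> Option<T> {
        loop {
            let head = self.head.load(Ordering::Acquire);
            if head.is_null() {
                return None;
            }
            // Reading through `head` is only sound because nodes are never freed.
            let next = unsafe { (*head).next };
            if self
                .head
                .compare_exchange_weak(head, next, Ordering::AcqRel, Ordering::Acquire)
                .is_ok()
            {
                // We severed the head: move its value out and leak the node.
                return Some(unsafe { ptr::read(&(*head).value) });
            }
        }
    }
}
```

Both loops have the same shape: read, prepare, CAS, and go around again if somebody else got there first.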

Spin Spin Spin

If another thread either pushed or popped a node after we read the head value but before we performed our CAS, then our CAS will fail because the current value is no longer what we read before. We’ve been had! But we can just spin until we succeed or crash from the bug we accidentally wrote.

This is a common theme in lock-free algorithms: spin until successful. This means that if a system has high contention (threads competing for the same resource), or spends lots of time doing things that look like spinlocks, a lock-free algorithm could be far less efficient with CPU resources than a mutex that puts blocked threads to sleep. If lots of threads are looping and throwing away work that they do, another approach may work better.

Maybe this is a good place to stop. Let’s give up and return to our cozy mutex-protected hometown, more experienced, and appreciative of its simple beauty.

No More Waiting

But our life has only recently taken a turn for the worse, and it would be a shame not to see this one through to rock bottom. Wait-free algorithms, a subset of lock-free algorithms, guarantee bounded-time execution. If your algorithm involves atomic variables and a bounded number of steps, you’ve got a wait-free algorithm on your hands!

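A simple one is incrementing and decrementing an atomic reference counter. This is what Swift and sometimes Rust (through its Arc type) use for keeping track of objects shared by multiple threads that need to be destroyed exactly once when all threads are done. This is a simple form of garbage collection! (CPython counts references too, but it has traditionally relied on its global interpreter lock rather than atomic operations to keep the counts consistent.)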

The use of a constant number of instructions (1 in the case of incrementing or decrementing an atomic reference counter) is actually an example of a particularly strong type of wait-freedom known as “wait-free population oblivious” where the number of steps we take in our code is not dependent on the number of threads participating. Other wait-free algorithms sometimes work by trying to complete the work of a bounded number of other threads, and that bound could grow or shrink as the number of participating threads changes.
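As a rough sketch of what such a counter looks like, here is a stripped-down version in Rust. The type `RefCount` and its method names are invented for this example; the memory orderings follow the pattern commonly used for reference counts, but treat them as an assumption to verify rather than gospel.

```rust
use std::sync::atomic::{fence, AtomicUsize, Ordering};

/// A bare atomic reference counter (hypothetical type for illustration).
pub struct RefCount {
    count: AtomicUsize,
}

impl RefCount {
    /// Starts at 1: whoever creates the object holds the first reference.
    pub fn new() -> Self {
        RefCount { count: AtomicUsize::new(1) }
    }

    /// Take another reference: a single atomic add, no loop, no retry.
    pub fn acquire(&self) {
        self.count.fetch_add(1, Ordering::Relaxed);
    }

    /// Give up a reference. Returns true for exactly one caller, the one
    /// that dropped the count to zero and must destroy the shared object.
    pub fn release(&self) -> bool {
        if self.count.fetch_sub(1, Ordering::Release) == 1 {
            // Make every other thread's prior writes visible before destruction.
            fence(Ordering::Acquire);
            true
        } else {
            false
        }
    }
}
```

Neither method contains a loop, so the number of steps does not depend on how many other threads are hammering the same counter.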

The Value of Reliable Latency

More complex wait-free algorithms are often a bit slower than lock-free counterparts when there’s no contention, but under high load they can weather the storm with predictable latency, making them an ideal choice for use in real-time systems.

Reliable latency is particularly important for building high-performance systems, even when it means higher average latency. If there is a rare, super slow event, it can cause backpressure to whiplash through large swaths of the system and in practice you’ll get blasts of failures, like connection errors as the TCP backlog quickly fills up. People who have managed Redis at high scales are often all too familiar with the devastating effects of a single very slow command on their overall system’s reliability, because it stops all other commands from being handled until the slow one is complete. With concurrent algorithms, it’s often a similar story.

In the last few years, folks have found a nice balance between usually-fast lock-free and reliable wait-free algorithms by attempting the lock-free version at first, and falling back to the wait-free version if it doesn’t pan out. This reminds me of the compromise found in modern hybrid mutexes to improve the flexibility of the implementation.

Lock-Free Transactions on Multiple Items in a Tree

A technique sometimes used in databases and filesystems is shadow paging. Sometimes we want to atomically update multiple items stored in a tree structure. The basic idea is:

- read the pointer to the root

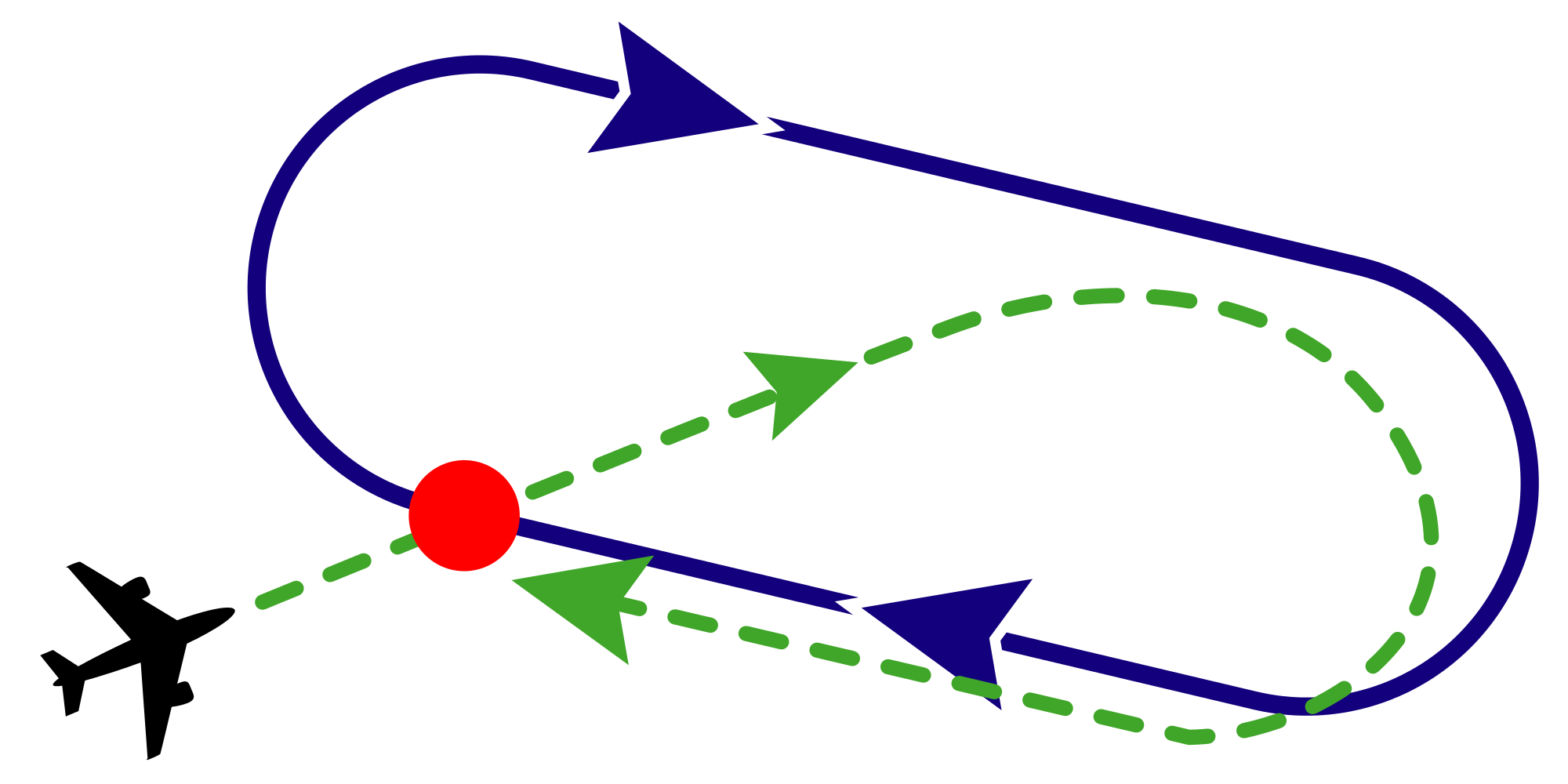

- copy the things we want to change into new tree nodes, then go up the tree creating new (copied) nodes that reference the previous copied and changed level, going up the tree until we reach the root. All of this is done without changing the original tree.

- Finally, we CAS the root to point to our changed pages.

- If the CAS worked, our multi-item transaction was successful. If not, we either retry or propagate an error to the next higher level of our system.
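The steps above can be sketched with a tiny immutable binary search tree in Rust. Everything here (`Node`, `Tree`, `Db`, `set`, `lookup`, `transact`) is a hypothetical name for illustration, and a real database page looks nothing like this. One loud assumption: replaced roots are leaked rather than freed, which keeps the sketch free of dangling pointers but is not something you would ship.

```rust
use std::sync::atomic::{AtomicPtr, Ordering};
use std::sync::Arc;

/// Immutable tree node; unchanged subtrees are shared between versions.
struct Node {
    key: u32,
    value: i64,
    left: Tree,
    right: Tree,
}
type Tree = Option<Arc<Node>>;

/// Build a new tree with `key` set to `value`, copying only the nodes on the
/// path from the root down to `key`. The original tree is left untouched.
fn set(tree: &Tree, key: u32, value: i64) -> Tree {
    Some(Arc::new(match tree {
        None => Node { key, value, left: None, right: None },
        Some(n) if key < n.key => Node {
            key: n.key, value: n.value,
            left: set(&n.left, key, value), right: n.right.clone(),
        },
        Some(n) if key > n.key => Node {
            key: n.key, value: n.value,
            left: n.left.clone(), right: set(&n.right, key, value),
        },
        Some(n) => Node { key, value, left: n.left.clone(), right: n.right.clone() },
    }))
}

fn lookup(tree: &Tree, key: u32) -> Option<i64> {
    let mut cur = tree;
    while let Some(n) = cur {
        if key == n.key {
            return Some(n.value);
        }
        cur = if key < n.key { &n.left } else { &n.right };
    }
    None
}

pub struct Db {
    root: AtomicPtr<Tree>,
}

impl Db {
    pub fn new() -> Self {
        Db { root: AtomicPtr::new(Box::into_raw(Box::new(None))) }
    }

    pub fn get(&self, key: u32) -> Option<i64> {
        // Sound only because published roots are never freed in this sketch.
        lookup(unsafe { &*self.root.load(Ordering::Acquire) }, key)
    }

    /// Apply all `writes` atomically: readers see none of them or all of them.
    pub fn transact(&self, writes: &[(u32, i64)]) {
        loop {
            let old = self.root.load(Ordering::Acquire); // read the root
            let mut tree: Tree = unsafe { (*old).clone() };
            for &(k, v) in writes {
                tree = set(&tree, k, v); // copy the changed paths
            }
            let new = Box::into_raw(Box::new(tree));
            match self.root.compare_exchange(old, new, Ordering::AcqRel, Ordering::Acquire) {
                Ok(_) => return, // committed; ASSUMPTION: the old root is leaked
                Err(_) => unsafe { drop(Box::from_raw(new)) }, // lost the race, retry
            }
        }
    }
}
```

Calling `db.transact(&[(1, 10), (2, 20)])` publishes both writes with a single CAS on the root, so no reader can observe key 1 updated without key 2.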

This copy-on-write technique is quite useful in some lock-free systems, but it can involve excessive copying. It’s fairly rare that this technique is a better choice than using fine-grained reader-writer locks on multiple items in a tree. Still, a good trick to have in our spellbook for transactions on a small number of shared items.

There are a number of interesting lock-free transaction techniques that work on larger datasets, but they are a little more complicated. If you’re curious I suggest starting with this one. Note that these are actually algorithms from the distributed systems world, which is similar to lock-free in many ways, differing mostly in terms of latency and reliability. If it works in a distributed system, it will work on a single system, but may totally be overkill.

Common Problems in Lock-Free Programs

The ABA Problem

5 == 5 - 1 + 1

One may assume “if our CAS succeeded, nothing has happened since we read the old value”. This is only true for monotonic values like a counter that you only increment. But if you can increment AND decrement the counter, all hell breaks loose. If a non-monotonic value was 17 one minute ago and it’s 17 now, there may have been a bunch of random increments and decrements in the interlude. Or maybe a single operation that caused the value to wrap.
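Here is a tiny single-threaded sketch of that failure mode in Rust, with the “other thread” played by two ordinary lines of code so the outcome is deterministic. The function name `aba_demo` is made up for this example.

```rust
use std::sync::atomic::{AtomicI64, Ordering};

/// Returns true if our CAS succeeded even though the value changed twice
/// between our read and our CAS (hypothetical demo function).
fn aba_demo() -> bool {
    let counter = AtomicI64::new(17);

    // We read the value and go off to compute something based on it.
    let seen = counter.load(Ordering::SeqCst);

    // Meanwhile, somebody else increments and then decrements the counter.
    counter.fetch_add(1, Ordering::SeqCst); // now 18
    counter.fetch_sub(1, Ordering::SeqCst); // 17 again

    // The CAS compares values, not histories, so it cannot tell.
    counter
        .compare_exchange(seen, 99, Ordering::SeqCst, Ordering::SeqCst)
        .is_ok()
}
```

The CAS succeeds, and our code walks away believing nothing happened in the interlude.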

If you’re using a 64-bit counter, and you’re incrementing it once every second for every human on earth, in less than 100 years your counter will reach the maximum 64-bit number and wrap back to 0 when the next addition occurs. If you’re using a 32-bit number, you have less than one second of sanity. Don’t spend it all in one place!
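The arithmetic is easy to check. The sketch below assumes roughly 8 billion humans, a figure I am supplying for illustration, and the function name `seconds_until_wrap` is likewise invented.

```rust
/// Seconds in a (non-leap) year.
const SECONDS_PER_YEAR: u128 = 365 * 24 * 60 * 60;

/// Whole seconds until a counter of `bits` bits wraps around, if it is
/// incremented `increments_per_second` times every second.
fn seconds_until_wrap(bits: u32, increments_per_second: u128) -> u128 {
    (1u128 << bits) / increments_per_second
}

/// ASSUMPTION: about 8 billion humans, each incrementing once per second.
const HUMANS: u128 = 8_000_000_000;
```

With these numbers `seconds_until_wrap(64, HUMANS) / SECONDS_PER_YEAR` comes out at 73 whole years, while `seconds_until_wrap(32, HUMANS)` is 0: the 32-bit counter wraps in under a second because 2³² is smaller than the number of increments per second.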

This is problematic to the extent that we conflate equality with a lack of change. In the next section I’ll show you a gnarly scar I got from making this mistake.

ABA and Pointers

Very often, we use CAS operations on pointers. If we can rely on a strong GC system, we don’t need to worry about dangling pointers, and it can be simpler to build more complicated lock-free structures in Java or Go compared to C++ or Rust because of this.

Pointers are not monotonic!!! Our memory allocators often will put a new memory family in the same memory house when the old one moves away to memory heaven (or memory hell if they were bad and spent their lives writing lock-free algorithms).

This really happened to me:

- I put a 48 byte structure into a lock-free stack that represented the history of a shared piece of state

- A thread read the stack.head for the “history stack”

- I deleted the structure and set the history stack’s stack.head to null. I freed all of the objects that were in history, including the object from step #1

- I created a new history stack for the object, and allocated a new 48 byte structure. The memory allocator chose the same offset as the original object in step #1, because it was a good fit.

- the thread from #2 looked at the old history stack and decided it should attempt to augment that history

- the thread from #2 created a new update whose correctness was dependent on the history being unchanged

- the thread from #2 did a CAS operation on the new stack, which represented significant changes. The CAS worked because the address was the same as when the read occurred in step #2. My tests did not catch this bug.

- I went to memory hell for a long time until I understood the nature of my memory sins. It was messed up.

This was actually two bugs: ABA and a dangling pointer that was left referring to invalid state. I assumed my CAS protected me from caring about it as long as I never dereferenced it, but I was dead wrong. If you’re curious, I was trying to implement a similar system to the one shown on slide 11 from this presentation on Bw-Trees.

Common Safeguards

Mitigating ABA

There are a number of techniques for avoiding ABA!

- Don’t write lock-free algorithms. It’s really a no-brainer. Don’t do it.

- Add some extra stuff to the data that we put in atomic variables. Use 16 bits to store a quasi-monotonic counter that you bump by 1 each time you modify the shared state. How many bits of state do you really need? 64-bit Linux actually uses 48 bits for addresses (256 TB of address space, but this is reconfigurable if you are baller AF), so you have 16 bits free with pointers anyway. If each structure takes up 2⁵ bytes, and it’s allocated at aligned addresses, then you have 5 bits of free state in the “low-bits” to use for even more tag data. Just don’t forget to zero those bits out before you dereference the actual pointer!
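The counter-in-the-high-bits trick can be sketched like this in Rust. To stay portable the example treats addresses as plain 64-bit integers rather than real pointers, and `pack`, `unpack`, and `tagged_cas` are hypothetical helper names. It assumes addresses fit in 48 bits, as described above.

```rust
use std::sync::atomic::{AtomicU64, Ordering};

/// ASSUMPTION: addresses fit in the low 48 bits, leaving 16 bits for a tag.
const ADDR_BITS: u32 = 48;
const ADDR_MASK: u64 = (1 << ADDR_BITS) - 1;

/// Pack a 48-bit address and a 16-bit modification counter into one word.
fn pack(addr: u64, tag: u16) -> u64 {
    debug_assert!(addr <= ADDR_MASK);
    ((tag as u64) << ADDR_BITS) | addr
}

/// Split a word back into (address, tag). Always do this before treating
/// the address as a pointer.
fn unpack(word: u64) -> (u64, u16) {
    (word & ADDR_MASK, (word >> ADDR_BITS) as u16)
}

/// Swap in `new_addr` and bump the tag, but only if the slot still holds
/// exactly the word (address AND tag) that we saw earlier.
fn tagged_cas(slot: &AtomicU64, seen: u64, new_addr: u64) -> bool {
    let (_, tag) = unpack(seen);
    let next = pack(new_addr, tag.wrapping_add(1)); // quasi-monotonic: wraps at 2^16
    slot.compare_exchange(seen, next, Ordering::AcqRel, Ordering::Acquire)
        .is_ok()
}
```

If the slot goes from address A to B and back to A, the tag has moved from 0 to 2, so a thread still holding the original word fails its CAS instead of being fooled. The protection is only “quasi” because the tag itself wraps after 65,536 modifications.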

Mitigating Dangling Pointers and Use After Free

In addition to ABA, we have new challenges in managing our memory, now that multiple threads may be reading and mutating our shared state concurrently.

When we remove an item from a lock-free stack, for example, there may be threads that read the node just before we detached it! Those threads may still be operating on the now-unreachable state. So after we remove the node from the stack, we must wait until we know that all threads are done reading it before using the memory for something else.

This is a tricky problem, but luckily there are some good ways to reduce the bleeding.

- Use a language with GC. This eliminates these bugs by design. Plenty of good choices out there. Specialization is for insects! Go learn Java, you C++ hipsters! Rust’s guaranteed object destruction without GC doesn’t cover your ass in the lock-free world (but check out the first links in #2 or #3 for nice solutions).

- Epoch Reclamation has threads check in to an “epoch” before they access shared state. When they stop working on shared state, they check out of that epoch. When state is made unreachable, and you want to delete it, you have to wait until any thread that COULD have seen it has moved on. For each object we want to free, we add it to the current epoch’s garbage bag (this could be a later epoch than the one we initially checked into). Only after all threads have checked out of epochs before or equal to the one for a particular garbage bag can we actually free the data. This is how I fixed the bug I hit in the ABA and Pointers section. This is used extensively in the Linux kernel’s RCU system.

- Hazard Pointers are similar to epoch reclamation but they operate on a more fine-grained level. This can be good for keeping latency per reclamation lower, but it will drop the throughput of the system.

Making a Lock-Free System For Real

So, you want to use this stuff in production. The reliability consultant in me delights. Go forth. Build a bonfire. But seriously, this takes a real investment. Like distributed algorithms, there are tons of subtleties here that are easy to miss, even by experts. Here is a responsible path to take if you choose to do so:

- Use a popular, well-tested existing library for as much as possible. Ideally one known to be running at very high scales at one of the infrastructure / financial giants in correctness-critical paths, not just some low-risk internal subsystem.

- If you must implement something on your own, scour the literature for known solutions. Opt for the ones that are a little older with high citation counts. New algorithms described in papers in this field are sometimes found to contain subtle bugs.

- Understand by modeling before implementing. Write an Iris, SPIN, TLA+, etc… model describing the algorithm to make sure you really understand what’s going on. You will usually save a lot of time by modeling it before jumping into implementation. Check out this guide for getting started with TLA+! It’s weird and fun.

- Follow the cleanroom methodology while implementing it.

- Find a way to deterministically and exhaustively evaluate all interesting thread interleavings of your implementation. Lots of bugs will jump out if you can do this! Just running a test workload for a few weeks on a small test cluster is not representative of what will happen on diverse hardware running diverse workloads and causing threads to be scheduled in diverse ways. This is the holy grail! Start your implementation from the beginning with this goal in mind, and build things into it that make testing in this way easier. (and stay tuned for a future post on this subject)

- Use the LLVM sanitizers extensively while exercising interleavings in the code, if your programming language supports them or similar tooling. They will slow down your program a little bit, but will detect all sorts of bugs that may not break your tests. They can find race conditions, memory leaks, use-after-free bugs, buffer overflows, all sorts of juicy stuff. When Hanno compiled a standard Linux system to run the sanitizers on his entire system, he found and fixed an astounding number of bugs in several programs you may rely on every day.

- Remember that by learning about specific bug classes, you may actually become MORE vulnerable to falling victim to them! We have a tendency to be overconfident about our ability to deal with problems we’ve learned about.

- Don’t write lock-free algorithms. You will die.

Thanks for reading! This is my first blog post, and I’d appreciate any feedback that would improve the experience for future articles! I’d like to give special thanks to those who defended readers against some of my egregious and irresponsible use of language, in (possibly buggy) alphabetical order: Alex Laties, Casey C, daiyi, Gabe Conradi, Matthias Nehlsen, Peter Kolloch, Philipp Muens, Sargun Dhillon, Sassan F, and Steve Salevan, thank you so much!

Tyler Neely
